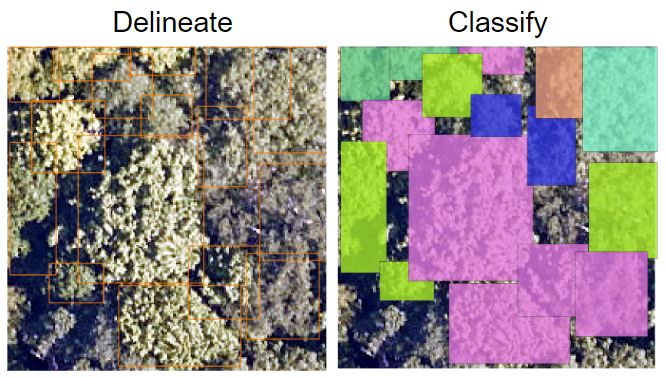

A data science competition to delineate and classify individual tree crowns in high-resolution remote sensing data.

Understanding the number, size, and species of individual trees in forests is crucial to mitigating the effects of climate change, managing invasive species, and monitoring shifting land use on natural systems and human society. However, collecting data on individual trees in the field is expensive and time consuming, which limits the scales at which this crucial data is collected.

Remotely sensed imagery from satellites, airplanes, and drones provide the potential to observe ecosystems at much larger scales than is possible using field data collection methods alone And people from various fields and backgrounds (data and computer scientists, ecological remote sensing specialists, computational biologists) can advance this field more quickly than groups working on their own.You are invited to participate in the IDTReeS 2020 Competition to advance and compare our methods for mapping individual trees. Enter your email to be notified of updates and keep reading below for more details.

What is it?

- Data science competition to develop and/or test algorithms on two tasks (1) delineation of tree crowns and (2) classification of their species identity on airborne remote sensing images

- Participants can engage in one or both of the competition tasks

- Uses 0.1 – 1 m resolution remote sensing data (RGB, lidar and hyperspectral) from three forest sites of the National Ecological Observatory Network (NEON; www.neonscience.org/data-collection/airborne-remote-sensing)

- Allows for cross-site testing to answer the question of; how well do algorithms developed at one site work at other sites?

What do I have to do?

- Overall: based on training data from 2 sites, develop algorithms that can map tree crown boundaries and species identities from airborne image data at 3 NEON sites.

- Crown delineation task: training and output data are boundary boxes that delineate the boundaries of individual tree crowns

- Species classification task: training data are bounding boxes with species already identified. Output data will be classification of species on bounding boxes of unknown species identity

- Cross-site comparison: performance will be tested on the same sites for which the training data are provided as well as one additional site from which none of the training data was provided

- Opportunity to publish results in a collection of papers from the competition (PeerJ Collection of papers)

2020 results

The 2020 competition was completed in August. The winning teams and methods have been announced on the 2020 results page. A collection of papers detailing the competition and the methods will be submitted to PeerJ in 2021. Check back for more updates on these manuscripts.

Ready to participate?

- Enter your email to register. New participants welcomed until the final submission date.

- Read the instructions and supplementary information

- Download the latest version of the train and test data

- As you develop your methods:

- Send us your questions using this form

- Read our responses to participant questions in the FAQ document

- Self-evaluate on the training data using the evaluation code

- Provide your final submission by the submission deadline with this submission form

Timeline

In recognition that for many people, schedules, duties, and priorities have changed over the past few months, we have modified the timeline of this competition. The list below has our current dates for data release and deadlines. Additional updates will be announced on our email list.

- June 1: test data released and submission process open for live evaluations

- August 1: deadline for participants to submit data for evaluation

- August 8: announcement of results/winner and organization of manuscript collection

- August 15: deadline for participants to sign up for manuscript submission

Want to learn more?

We ran a similar competition in 2017. The results of the 2017 competition have now been published as a paper collection. The main results are described in the central paper and the details are available in papers by the participants in the collection. The main score comparisons for the primary tasks are listed on the 2017 results page